Kubernetes is an amazing, very powerful tool, and even small details matter. In complex setups we usually manage the cluster with YAML files, but for small tests, projects and changes a few commands are enough. In this short post I would like to describe how to expose our app using services. Thanks to services, pods inside the cluster can communicate with each other, and we can reach them as well.

If we work with Kubernetes, we probably deploy applications such as web apps. They can do a lot inside our cluster, but in most cases we also want to access them from outside the cluster, for our users or for other reasons. By default they are not exposed: users cannot reach them, and other resources within the cluster have no stable name or address to reach them by. We will see how to solve that in a very simple way.

Simple Nginx Deployment

Let’s start with a simple Nginx image for the deployment:

kubectl create deployment nginx --image=nginx

kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7854ff8877-s58qh 1/1 Running 0 113s

After this operation, the Nginx web server runs inside our cluster. But it is not available to the outside world. Even worse, other workloads in the cluster cannot reach it by any friendly name. If we want to get data from port 80, we can of course look up the pod IP, but what if the pod is recreated? What if we want to scale Nginx up or down? Then that IP becomes useless, because it changes too often and every time we would need to reconfigure other parts of our system. That is not a solution, and the conclusion is simple: we need a different method to handle this problem.
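To see why pod IPs are a poor target, it can help to watch them change. The following is a minimal sketch, assuming kubectl is configured for the cluster and relying on the app=nginx label that kubectl create deployment puts on its pods. The function name and the default replica count are illustrative only.

```shell
# Print the Nginx pods with their IPs, scale the deployment, then print them again.
# Assumption: the deployment is named "nginx", as created earlier in this post.
show_pod_ips_after_scaling() {
  replicas="${1:-3}"   # illustrative default, any number works

  # -o wide adds the IP and NODE columns to the pod listing
  kubectl get pods -l app=nginx -o wide

  # Change the number of pods and wait until the rollout has finished
  kubectl scale deployment nginx --replicas="$replicas"
  kubectl rollout status deployment/nginx

  # Each new pod has its own IP address
  kubectl get pods -l app=nginx -o wide
}
```

Calling show_pod_ips_after_scaling 3 should list one pod IP before scaling and three afterwards, none of which is guaranteed to survive a pod restart.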

Exposing Deployment

To expose our pods, we need to create a service. Thanks to that, other pods can reach them using a simple name like “our-service”. Kubernetes handles the rest automatically, and we do not need to keep track of individual pods:

kubectl expose deployment nginx --port=80

Simple and clean. From now on, Nginx is available to other workloads inside the cluster under the service name nginx. Under the hood, Kubernetes creates a service of the default type, ClusterIP, to handle requests from other cluster resources. What about scaling? It is no longer a problem. We use the name of the service, so the number of pods behind it does not matter. Kubernetes spreads requests across them automatically, because a ClusterIP service also does load-balancing.
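One way to confirm that the service name resolves inside the cluster is to send a request from a temporary pod. This is a sketch under assumptions: the pod name tmp-client and the busybox image are arbitrary choices, and the temporary pod is assumed to run in the same namespace as the service.

```shell
# Fetch the Nginx welcome page from inside the cluster using the service name.
check_service_from_inside() {
  service="${1:-nginx}"   # service name, defaults to the one created above

  # --rm deletes the temporary pod when the command finishes;
  # --restart=Never makes kubectl create a bare pod instead of a managed workload.
  kubectl run tmp-client --rm -i --restart=Never --image=busybox -- \
    wget -qO- "http://${service}"
}
```

Running check_service_from_inside should print the HTML of the default Nginx page if the service is working.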

Let’s check our new service by getting all services:

kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 12d

nginx ClusterIP 10.110.131.246 <none> 80/TCP 32s

But wait a moment… if we try to open port 80 in our web browser, we will not see anything. So… what’s wrong? Nothing. It works fine, but a ClusterIP service is reachable only from inside the cluster.

Exposing using NodePort

To do that, we need to expose the deployment using a different service type: NodePort. This type opens a separate port on every node of the cluster and forwards it to port 80 (or another port selected by us) of the deployment. By default, services are not exposed outside the cluster, and that makes a lot of sense from a security perspective. First, we need to remove the previously added service:

kubectl delete service nginx

Remember: this removes the service, not the deployment. Resources of different kinds can share the same name without any issues. Now we can expose our deployment again, this time using a different type:

kubectl expose deployment nginx --type="NodePort" --port=80

This does not mean that port 80 is exposed outside. The new service uses port 80 for Nginx, but Kubernetes automatically selects a free port (by default from the 30000-32767 range) and assigns it to this service. We can check that by listing the services again:

kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 12d

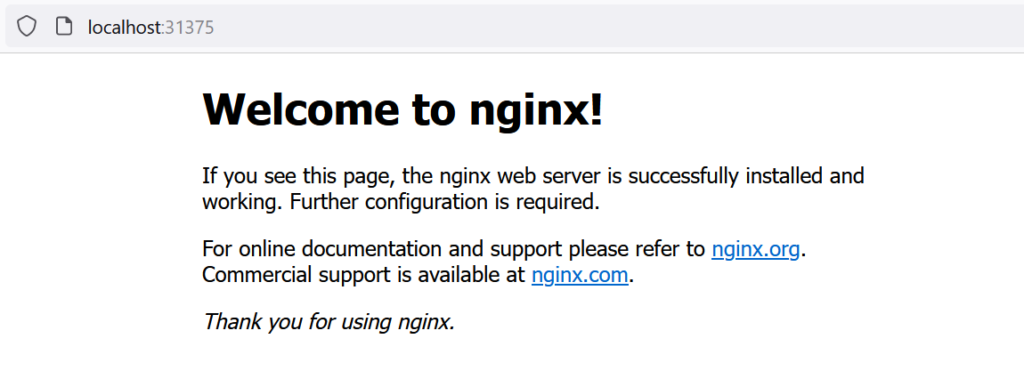

nginx NodePort 10.107.60.35 <none> 80:31375/TCP 5sAs we can see, right now port 80 from Nginx deployment is linked to port 31375 on cluster level. We can check that using web browser:
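The same check can be done from a terminal. Below is a small sketch that reads the assigned node port from the service and requests the page with curl. The node address is an assumption you have to supply yourself: it is the IP of any cluster node (on a local single-node cluster it is often the machine's own address), and the function name is illustrative.

```shell
# Look up the node port assigned to the nginx service and request the page.
# Usage: check_node_port NODE_IP
check_node_port() {
  node_ip="$1"   # address of any cluster node, supplied by the caller

  # jsonpath extracts the nodePort of the first port defined on the service
  node_port="$(kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}')"

  echo "Requesting http://${node_ip}:${node_port}"
  # Show only the first lines of the response
  curl -s "http://${node_ip}:${node_port}" | head -n 5
}
```

With the service from this post, the printed URL would end in :31375, but the port will differ on every cluster.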

Voilà! Our service works correctly, Nginx is accessible, and we can use it. When should we use which method? It depends on the scenario, of course. Let’s assume we add PHP as an API behind Nginx: it does not have to be exposed outside the cluster, we just need communication between the Nginx and PHP pods (so ClusterIP will be fine). If we add other background resources, such as a database, the same applies. We do not need to expose them, there is no reason for that. The entry point for users in this scenario is Nginx, and it is the one that should be exposed.
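For setups managed with YAML files, the NodePort service could also be written declaratively. The sketch below writes a manifest that should be roughly equivalent to the kubectl expose call with the NodePort type. The file name nginx-service.yaml is an arbitrary choice, and the selector assumes the app=nginx label that kubectl create deployment adds to its pods.

```shell
# Write a Service manifest roughly equivalent to:
#   kubectl expose deployment nginx --type="NodePort" --port=80
cat > nginx-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    app: nginx        # label set on the pods by "kubectl create deployment nginx"
  ports:
    - port: 80        # port of the service inside the cluster
      targetPort: 80  # port the Nginx container listens on
EOF

# Apply the manifest to the cluster (left commented out, needs a running cluster):
# kubectl apply -f nginx-service.yaml
```

Changing type to ClusterIP, or removing the line, gives the internal-only variant that suits backends such as the PHP API or a database.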